It's almost carnival time! I have noticed that a lot of German hits are played in Dutch pubs. Because the two languages are so similar, I am often asked whether a song is German or Dutch. Famous Dutch and German songs are also often translated into the other language. This raises an interesting question: how does a neural network distinguish between these two kinds of music? It cannot simply learn from the rhythm of the music. It must analyze the words in the song, so it has to filter out the rhythm somehow.

Collect Data

In this little experiment, I wanted to use songs that are available in both languages, so my first task was to collect these songs. I ended up with the following table.

Data Preprocessing

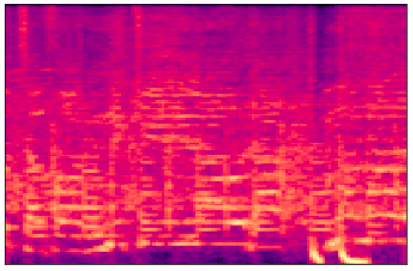

I split each song into 3-second chunks and converted each chunk into a spectrogram image. These images can be used to identify spoken words phonetically. Each spectrogram has two geometric dimensions: one axis represents time, and the other represents frequency. A third dimension, the amplitude of a particular frequency at a particular time, is represented by the intensity or color of each point in the image. I ended up with 2340 training images (1170 per class) and 300 validation images (150 per class). For the preprocessing step, I used my Jupyter notebook.
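To make the chunking and spectrogram step more concrete, here is a minimal sketch using only NumPy. It is not the code from my notebook: the function names `split_into_chunks` and `spectrogram_db`, the FFT size, the hop length, and the synthetic 440 Hz test tone are all assumptions chosen for illustration. A real pipeline would first load the audio from a file and would probably use an audio library for the spectrogram.

```python
import numpy as np


def split_into_chunks(samples, sample_rate, chunk_seconds=3.0):
    """Split a 1-D signal into non-overlapping chunks and drop the incomplete tail."""
    chunk_len = int(sample_rate * chunk_seconds)
    n_chunks = len(samples) // chunk_len
    return [samples[i * chunk_len:(i + 1) * chunk_len] for i in range(n_chunks)]


def spectrogram_db(chunk, n_fft=512, hop=256):
    """Log-magnitude spectrogram computed with a short-time Fourier transform.

    Returns an array of shape (n_fft // 2 + 1, n_frames):
    rows are frequency bins, columns are time frames.
    n_fft and hop are illustrative values, not the ones used in the experiment.
    """
    window = np.hanning(n_fft)
    n_frames = 1 + (len(chunk) - n_fft) // hop
    frames = np.stack(
        [chunk[i * hop:i * hop + n_fft] * window for i in range(n_frames)]
    )
    magnitude = np.abs(np.fft.rfft(frames, axis=1)).T
    # Small constant avoids log(0) for silent bins.
    return 20.0 * np.log10(magnitude + 1e-10)


# Synthetic stand-in for a song: 10 seconds of a 440 Hz tone at 8 kHz.
sample_rate = 8000
t = np.arange(10 * sample_rate) / sample_rate
signal = np.sin(2 * np.pi * 440.0 * t)

chunks = split_into_chunks(signal, sample_rate)
spec = spectrogram_db(chunks[0])
```

Each array returned by `spectrogram_db` can then be saved as an image (for example with a plotting library), where the pixel intensity encodes the amplitude in decibels.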

Neural Network

I use transfer learning, mainly to reduce the training time. I chose the ResNet architecture with 18 layers (ResNet-18). You can find my code in my GitHub repository.

Results

I achieved a training accuracy of 83% and a validation accuracy of 80% after 24 epochs. A long time ago, I built a music genre classifier for bachata and salsa. As far as I remember, it reached a training accuracy of 90% and a validation accuracy of 87%.